Prompt engineering is the art and science of crafting inputs (called prompts) to get optimal outputs from AI models like ChatGPT, DALL·E, or Codex. It’s especially important when working with large language models (LLMs) because their behavior is highly sensitive to how you phrase your request.

What is Prompt Engineering?

At its core, prompt engineering is about:

- Understanding the model’s behavior

- Designing precise, clear, and structured prompts

- Iterating and refining prompts to achieve better, more reliable results

When is Prompt Engineering Useful?

Prompt engineering can significantly improve outcomes in tasks like:

- Content creation (blogs, marketing copy)

- Code generation and debugging

- Summarization or translation

- Data analysis and transformation

- Design and image generation

- Education and tutoring

- Chatbot design

- Automating workflows

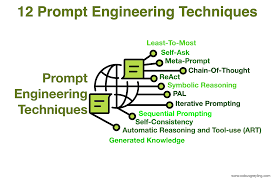

Core Techniques of Prompt Engineering

1. Be Specific and Clear

✅ Good:

“Write a 100-word blog introduction about the benefits of meditation for busy professionals.”

🚫 Bad:

“Write about meditation.”

2. Give Examples (Few-shot Learning)

You can show the model a few examples of what you want:

Input: "I’m hungry"

Output: Emotion: Hunger

Input: "I miss my family"

Output: Emotion: Loneliness

Input: "I can't wait for the weekend!"

Output:
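If you reuse the same few-shot layout often, it can help to build the prompt from a list of examples. The sketch below is one possible way to do that; `build_few_shot_prompt` is a hypothetical helper name, not part of any library.

```python
def build_few_shot_prompt(examples, query):
    """Format labelled examples plus one unlabelled query as a few-shot prompt."""
    lines = []
    for text, label in examples:
        lines.append(f'Input: "{text}"')
        lines.append(f"Output: Emotion: {label}")
    # Leave the final Output empty so the model fills in the label.
    lines.append(f'Input: "{query}"')
    lines.append("Output:")
    return "\n".join(lines)


examples = [("I’m hungry", "Hunger"), ("I miss my family", "Loneliness")]
prompt = build_few_shot_prompt(examples, "I can't wait for the weekend!")
print(prompt)
```

Keeping the examples in a list makes it easy to add, remove, or reorder them while you iterate.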

3. Role Prompting (Set a Persona or Role)

You can ask the model to act as someone:

“You are a professional CV writer. Improve the following CV summary to be more impactful and concise.”

4. Chain-of-Thought Prompting (Step-by-Step Reasoning)

Ask the model to reason through a problem in steps.

“Solve this math problem step by step: A train travels 300 km at 60 km/h. How long does it take?”
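For problems with a known answer, you can pair the step-by-step prompt with a reference value to compare against the model's final result. This is only a sketch: `step_by_step_prompt` is a hypothetical helper, and the reference value comes from time = distance / speed.

```python
def step_by_step_prompt(problem):
    """Wrap a problem in a chain-of-thought style instruction."""
    return f"Solve this math problem step by step: {problem}"


prompt = step_by_step_prompt(
    "A train travels 300 km at 60 km/h. How long does it take?"
)

# Reference answer for checking the model's final line: time = distance / speed.
distance_km = 300
speed_kmh = 60
expected_hours = distance_km / speed_kmh

print(prompt)
print(expected_hours)  # 5.0
```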

5. Instructional Prompting

Be direct and use commands:

“Summarize this article in three bullet points.”

“List five benefits of regular exercise.”

6. Using Constraints

Add constraints like length, format, or style:

“Generate a 2-line poem about the sea in the style of Shakespeare.”
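Constraints are easier to rely on when you check them. The sketch below assumes you have the model's reply as a plain string; `meets_constraints` is a hypothetical helper, and `sample_reply` is placeholder text rather than real model output.

```python
def meets_constraints(text, max_lines=None, max_words=None):
    """Return True if text respects the given line and word limits."""
    lines = [line for line in text.splitlines() if line.strip()]
    words = text.split()
    if max_lines is not None and len(lines) > max_lines:
        return False
    if max_words is not None and len(words) > max_words:
        return False
    return True


# Placeholder reply, used only to exercise the check.
sample_reply = "first placeholder line\nsecond placeholder line"
print(meets_constraints(sample_reply, max_lines=2))  # True
print(meets_constraints(sample_reply, max_lines=1))  # False
```

Style constraints such as "in the style of Shakespeare" are not covered here; those still need a human reader or a separate evaluation step.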

7. Zero-shot vs. Few-shot vs. CoT

| Type | Description | Example |
|---|---|---|
| Zero-shot | No examples; straight instruction | “Translate ‘hello’ to French.” |
| Few-shot | Provide examples in the prompt | See emotion detection example above |
| Chain-of-thought | Ask the model to think step-by-step | “Explain how you got to your answer step-by-step.” |

🧪 How to Use Prompt Engineering in Practice

Tools You Can Use:

- ChatGPT / GPT-4 / GPT-4.5

  - Craft prompts directly in the chat.

  - Use the “Custom Instructions” feature for persistent context.

- OpenAI Playground

  - Offers temperature, top-p, and frequency penalty settings, which are useful for testing.

- Notebooks (Python + OpenAI API)

  - Use the `openai` Python SDK to automate and test prompt variations.

- Tools like LangChain, LlamaIndex, or RAG setups

  - Use prompts dynamically in apps that retrieve and augment knowledge.
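To test prompt variations in a notebook, one option is a small harness that runs each variant through the same model call. The sketch below makes assumptions: `complete` is a placeholder for whatever function sends a prompt to your model (for instance, a thin wrapper you write around an SDK), and `fake_complete` is a stand-in so the example runs offline.

```python
def run_variants(variants, complete):
    """Send each prompt variant through `complete` and collect the replies."""
    return {name: complete(prompt) for name, prompt in variants.items()}


def fake_complete(prompt):
    # Stand-in for a real model call; it only reports the prompt length.
    return f"[{len(prompt)} chars received]"


variants = {
    "vague": "Write about meditation.",
    "specific": (
        "Write a 100-word blog introduction about the benefits "
        "of meditation for busy professionals."
    ),
}

results = run_variants(variants, fake_complete)
for name, reply in results.items():
    print(name, "->", reply)
```

Swapping `fake_complete` for a real model call leaves the rest of the harness unchanged, which keeps the comparison between variants fair.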

💡 Best Practices

- Start simple, then iterate

- Use delimiters (like `"""` or `---`) to separate instructions and inputs

- Test multiple variants of prompts

- Save and document your best prompts

- Measure output quality for consistency and reliability
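The delimiter practice can be sketched as a small helper that keeps the instruction and the input visibly apart. `wrap_with_delimiters` is a hypothetical name, and rejecting inputs that already contain the delimiter is one design choice among several.

```python
def wrap_with_delimiters(instruction, text, delimiter='"""'):
    """Place the input text between delimiters, below the instruction."""
    if delimiter in text:
        # The boundary would be ambiguous; pick a different delimiter.
        raise ValueError("input text already contains the delimiter")
    return f"{instruction}\n\n{delimiter}\n{text}\n{delimiter}"


prompt = wrap_with_delimiters(
    "Summarize the text between the triple quotes in three bullet points.",
    "Meditation can help busy professionals manage stress.",
)
print(prompt)
```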

📘 Example Prompt Recipes

Summarizer Prompt:

“Summarize the following text in 3 bullet points: \n\n[TEXT]”

Email Writer Prompt:

“Write a professional follow-up email to a client named Sara, reminding her about our meeting scheduled on Friday.”

Code Explainer Prompt:

“You are a senior software engineer. Explain what this code does in plain English: \n\n[CODE]”
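Recipes like these are easy to save and reuse as templates. Here is a minimal sketch, assuming the bracketed placeholders are swapped for named fields; `RECIPES` and `fill_recipe` are hypothetical names chosen for illustration.

```python
RECIPES = {
    "summarizer": "Summarize the following text in 3 bullet points:\n\n{text}",
    "code_explainer": (
        "You are a senior software engineer. "
        "Explain what this code does in plain English:\n\n{code}"
    ),
}


def fill_recipe(name, **fields):
    """Look up a saved recipe and fill in its placeholders."""
    return RECIPES[name].format(**fields)


prompt = fill_recipe("code_explainer", code="totals = {}")
print(prompt)
```

Only the template is parsed by `str.format`, so braces inside the substituted code are passed through unchanged.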
